AI in penetration testing uses artificial intelligence to help ethical hackers find vulnerabilities, analyze risks, and prioritize security issues faster. It automates repetitive tasks while human experts focus on validation and real-world attack scenarios. As systems change continuously, AI helps organizations move from occasional testing to more proactive and continuous security practices.

Penetration testing is the process where security professionals simulate real cyberattacks to identify weaknesses in systems before malicious attackers can exploit them. The purpose of penetration testing is to discover vulnerabilities early, understand how they could be misused, and help organizations strengthen their security before real incidents occur. In modern environments, AI in penetration testing is increasingly supporting security teams by helping analyze findings faster and prioritize risks more effectively while keeping human validation central to the process.

As technology environments become more complex and systems change more frequently, the way penetration testing is performed is also evolving. Earlier, security testing was done periodically because systems changed slowly. Today, applications are updated weekly, cloud systems scale automatically, and new integrations are added constantly. This means vulnerabilities can appear at any time, which is why organizations are moving toward faster, automation-supported and more continuous testing approaches. This shift is shaping the future of penetration testing and ethical hacking practices, where AI-powered penetration testing supports more proactive and continuous security models.

Why AI in Penetration Testing Is Becoming Essential

Traditional penetration testing has always been effective when systems changed slowly and infrastructure remained relatively stable. Security teams would conduct assessments at scheduled intervals, identify vulnerabilities, and recommend fixes. However, modern technology environments no longer operate at that pace. Applications are updated frequently, cloud resources scale dynamically, and new integrations are introduced continuously. As a result, security risks can appear soon after a test is completed, making periodic testing alone insufficient.

Testing Happens Periodically, but Systems Change Continuously

Conventional penetration testing is typically performed at fixed intervals. Once testing is completed, results represent the security state of that specific moment. In modern environments where deployments and configurations change frequently, new vulnerabilities can emerge soon after testing ends. This creates gaps between assessments where risks may remain unnoticed.

The Attack Surface Has Expanded Significantly

Organizations now operate across cloud platforms, APIs, mobile applications, third-party integrations, and remote access environments. Every connection introduces a new potential entry point. Mapping and testing all these components manually becomes increasingly difficult as infrastructure grows in size and complexity.

Too Many Alerts, Not Enough Clarity

Modern security tools generate large volumes of alerts and vulnerability findings. While automation helps detect issues, many findings are low risk or not practically exploitable. Security professionals must spend significant time validating results, which can divert attention from genuinely critical vulnerabilities.
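A common first step against alert volume is collapsing duplicate alerts before anyone validates them. The minimal Python sketch below shows that step. It is plain grouping, not AI. The field names (`host`, `check_id`, `source`) and the sample data are hypothetical stand-ins for whatever your scanners actually export.

```python
from collections import defaultdict


def group_findings(findings):
    """Collapse duplicate alerts that share the same host and check ID.

    Each finding is a dict with hypothetical keys 'host', 'check_id' and 'source'.
    Returns {(host, check_id): sorted list of tools that reported it}.
    """
    grouped = defaultdict(set)
    for f in findings:
        grouped[(f["host"], f["check_id"])].add(f["source"])
    return {key: sorted(sources) for key, sources in grouped.items()}


# Invented sample alerts from two hypothetical scanners.
raw = [
    {"host": "10.0.0.5", "check_id": "TLS-WEAK-CIPHER", "source": "scanner_a"},
    {"host": "10.0.0.5", "check_id": "TLS-WEAK-CIPHER", "source": "scanner_b"},
    {"host": "10.0.0.5", "check_id": "TLS-WEAK-CIPHER", "source": "scanner_a"},
    {"host": "10.0.0.9", "check_id": "SMB-SIGNING-OFF", "source": "scanner_a"},
]

unique = group_findings(raw)
print(len(raw), "alerts ->", len(unique), "unique issues")
for (host, check), sources in unique.items():
    print(host, check, "reported by", ", ".join(sources))
```

Grouping by host and check ID turns four raw alerts into two issues to validate. The list of reporting tools is kept because agreement between tools can help during triage, though it does not replace validation.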

Prioritizing Real Risk Is Difficult

Not every vulnerability presents the same level of danger. The real challenge lies in understanding which weaknesses can realistically be exploited and what impact they may have on business operations. Traditional testing often produces long lists of findings without enough context for effective prioritization.

For example, a vulnerability scanner may report hundreds of issues, but only a few may actually allow an attacker to gain access to sensitive data or move deeper into a system. Security teams often spend time reviewing low-risk findings while critical issues remain hidden. AI helps reduce this gap by identifying which vulnerabilities are more likely to be exploited in real-world attack scenarios.
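The Python sketch below illustrates risk-based ranking. It assumes each finding carries three inputs:

* a severity score, such as a CVSS base score;
* an estimated probability of exploitation, for example an EPSS-style score or a model's prediction;
* a hypothetical asset-criticality weight.

Multiplying these three is an illustrative scoring rule, not a standard formula. The sample values are invented.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    cvss: float          # severity, 0.0-10.0
    exploit_prob: float  # estimated likelihood of exploitation, 0.0-1.0
    asset_weight: float  # hypothetical business-criticality multiplier

    @property
    def risk(self) -> float:
        # Illustrative scoring only: severity scaled by likelihood and asset value.
        return self.cvss * self.exploit_prob * self.asset_weight


# Invented sample findings.
findings = [
    Finding("Outdated jQuery on marketing site", cvss=6.1, exploit_prob=0.02, asset_weight=0.5),
    Finding("SQL injection in customer API", cvss=9.8, exploit_prob=0.60, asset_weight=1.0),
    Finding("Verbose error pages on intranet", cvss=5.3, exploit_prob=0.01, asset_weight=0.8),
]

ranked = sorted(findings, key=lambda f: f.risk, reverse=True)
for f in ranked:
    print(f"{f.risk:5.2f}  {f.name}")
```

Sorting by the combined score puts the SQL injection in the customer API first. The two lower-ranked findings still carry medium CVSS scores on paper.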

Human Capacity Has Natural Limits

Penetration testers bring creativity and expertise, but they also work within time and cognitive limits. Manually analyzing large datasets, testing multiple attack paths, and continuously monitoring changing environments is difficult to sustain at scale using human effort alone.

Artificial intelligence is being introduced to address these limitations. By analyzing large volumes of data quickly, identifying patterns, and helping prioritize high-risk findings, AI allows penetration testers to focus on validation, strategy, and complex attack scenarios where human judgment remains essential.

AI as an Offensive Tool: How Attackers Are Using AI

Artificial intelligence is changing cybersecurity on both sides. While security teams are exploring how AI can improve defense and testing, attackers are also using AI to make attacks faster, more scalable, and harder to detect. Understanding this shift helps explain why penetration testing itself must evolve.

Automated Reconnaissance at Scale

Before launching an attack, adversaries typically gather information about their target, such as exposed systems, technologies in use, and possible entry points. Traditionally, this required time and manual effort. AI can now automate this process by scanning large numbers of internet-facing assets, identifying patterns, and mapping potential weaknesses quickly. What once took days of research can now happen in minutes, allowing attackers to prepare targeted attacks more efficiently.

AI-Generated Phishing and Social Engineering

Generative AI has significantly improved the quality of phishing and social engineering attacks. Attackers can create realistic emails, messages, and conversations that closely resemble legitimate communication. These messages can be personalized, written in natural language, and adapted to different regions or roles within an organization. As a result, traditional signs of poorly written scams are becoming less reliable indicators of malicious intent.

Faster Vulnerability Discovery

AI-assisted tools can analyze applications, configurations, and codebases at high speed to identify potential weaknesses. By testing multiple combinations and patterns automatically, attackers can discover vulnerabilities faster than through manual exploration alone. This lowers the effort required to launch sophisticated attacks and increases the speed at which new vulnerabilities can be exploited.

Adaptive and Evolving Attack Techniques

Some AI-driven attacks can change their behavior based on how systems respond. For example, if one login method is blocked, the attack may automatically try alternative paths instead of stopping completely. This makes attacks more flexible and harder to predict compared to traditional scripted attacks, increasing the difficulty for defenders trying to detect unusual activity.

How AI Is Changing the Role of Penetration Testers

As artificial intelligence becomes part of security workflows, the role of penetration testers is beginning to shift. Earlier, a large portion of penetration testing involved manual activities such as scanning systems, collecting information, and checking known vulnerabilities. While these tasks remain important, AI is increasingly handling the repetitive and data-heavy parts of the process, allowing penetration testers to focus on higher-level analysis and decision-making.

Automation Reduces Repetitive Work

Many early stages of penetration testing involve tasks that follow predictable patterns, such as asset discovery, vulnerability scanning, and data collection. AI-assisted tools can perform these activities continuously and at scale, reducing the amount of manual effort required. This allows penetration testers to spend less time running routine checks and more time analyzing meaningful findings.

Human Judgment Remains Critical

Penetration testing is not only about finding vulnerabilities but also about understanding how they can realistically be exploited. AI can identify patterns and potential weaknesses, but it cannot fully understand business context, operational impact, or unusual attack paths created by human behavior. Penetration testers evaluate results, eliminate false positives, and determine which risks truly matter.

Greater Focus on Strategy and Validation

As AI generates more findings automatically, penetration testers increasingly take on the role of validating results and designing advanced attack simulations. Instead of focusing only on discovery, professionals focus on understanding how multiple weaknesses could be combined in real-world scenarios and how attackers might move through an environment after initial access.
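Chaining weaknesses can be modeled as a graph search. The Python sketch below assumes a hypothetical environment where each edge means a tester has already validated that control of one host gives access to the next. Breadth-first search then returns the shortest chain from the internet to a sensitive asset. Host names and edges are invented for illustration.

```python
from collections import deque

# Hypothetical environment: an edge A -> B means a validated weakness lets an
# attacker who controls A reach B (e.g. reused credentials, an exposed admin port).
reachable = {
    "internet": ["web-server"],
    "web-server": ["app-server", "build-agent"],
    "app-server": ["database"],
    "build-agent": ["domain-controller"],
    "database": [],
    "domain-controller": ["database"],
}


def shortest_attack_path(graph, start, target):
    """Breadth-first search: return the fewest-hop path from start to target, or None."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for nxt in graph.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None


print(" -> ".join(shortest_attack_path(reachable, "internet", "database")))
```

Breadth-first search guarantees the fewest hops in an unweighted graph. A natural refinement would be to weight edges by exploitation difficulty and switch to Dijkstra's algorithm.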

Collaboration Between Humans and AI

The future of penetration testing is not automation replacing professionals, but collaboration between intelligent tools and human expertise. AI improves speed and coverage, while penetration testers provide creativity, reasoning, and accountability. Professionals who understand how to guide and interpret AI outputs will be able to perform more effective and realistic security assessments.

In practice, AI is moving penetration testers away from repetitive execution toward analytical and strategic roles, where human insight becomes more valuable rather than less.

CEH v13 AI

Master the art of ethical hacking to identify and neutralize advanced cyber threats using AI-driven tools. Gain hands-on experience in penetrating secure systems to strengthen organizational defenses and protect critical data infrastructure.

Duration: 6 months

Skills you’ll build:

Other Courses

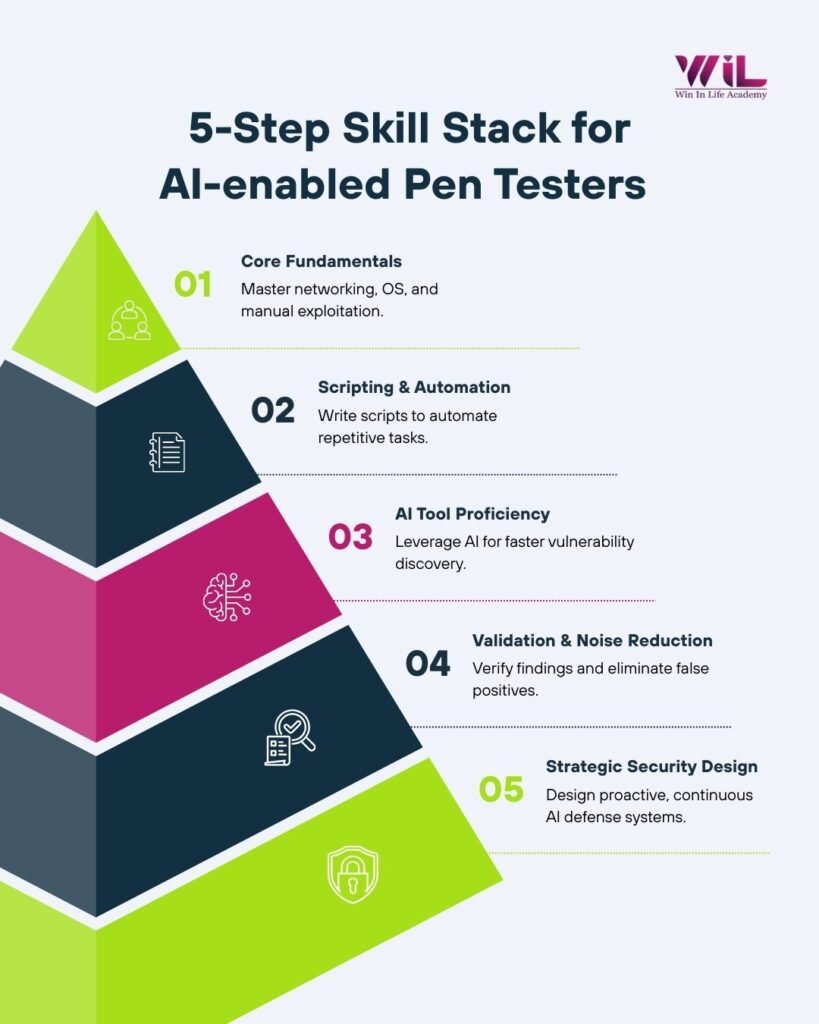

Skills Penetration Testers Need in an AI-Driven Security Landscape

As artificial intelligence becomes part of penetration testing workflows, the skills required for security professionals are also evolving. Earlier, effectiveness often depended on how well someone could operate tools manually. Today, the focus is shifting toward understanding automation, interpreting results, and making informed security decisions based on AI-assisted insights.

Comfort With Automation

Modern penetration testing environments generate large amounts of data that cannot be handled efficiently through manual processes alone. Penetration testers need to become comfortable working with automated workflows that run scans, collect information, and monitor environments continuously. The goal of pentesting automation is not to remove human involvement but to allow professionals to focus on deeper analysis instead of repetitive execution.

Ability to Customize Tools and Workflows

Security tools rarely fit every environment perfectly. Penetration testers who can modify scripts, adjust configurations, or integrate tools into automated workflows are better able to uncover meaningful vulnerabilities. Customization allows testing to move beyond default scanning and reflect real-world attack scenarios more accurately.
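Here is one example of integrating a tool into a workflow. Nmap can write machine-readable XML (the `-oX` option), and a short Python script can turn it into a list of open services for later stages. The sketch below uses only the standard library and a trimmed, hand-written sample of that format. Real output contains many more elements and attributes. The function name `open_services` is hypothetical.

```python
import xml.etree.ElementTree as ET

# Trimmed, hand-written sample in the shape of Nmap's XML output.
SAMPLE_XML = """<?xml version="1.0"?>
<nmaprun>
  <host>
    <address addr="192.0.2.10" addrtype="ipv4"/>
    <ports>
      <port protocol="tcp" portid="22"><state state="open"/><service name="ssh"/></port>
      <port protocol="tcp" portid="80"><state state="closed"/><service name="http"/></port>
      <port protocol="tcp" portid="443"><state state="open"/><service name="https"/></port>
    </ports>
  </host>
</nmaprun>"""


def open_services(xml_text):
    """Return (address, port, service) tuples for every open port in the XML."""
    root = ET.fromstring(xml_text)
    results = []
    for host in root.iter("host"):
        # Uses the first <address> element of each host.
        addr = host.find("address").get("addr")
        for port in host.iter("port"):
            state = port.find("state")
            if state is not None and state.get("state") == "open":
                service = port.find("service")
                name = service.get("name") if service is not None else "unknown"
                results.append((addr, int(port.get("portid")), name))
    return results


for entry in open_services(SAMPLE_XML):
    print(entry)
```

Only open ports are kept, so the closed port 80 is dropped. The resulting tuples could feed a deduplication or prioritization step.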

Understanding AI-Assisted Outputs

AI-generated findings are powerful but not always correct. Professionals must understand how AI tools reach conclusions, recognize limitations, and verify results before acting on them. Blindly trusting automated output can lead to missed risks or unnecessary remediation efforts. Interpretation and validation therefore become essential skills.

Strong Analytical and Problem-Solving Skills

Running tools is only the starting point of penetration testing. The real value comes from connecting multiple findings, identifying realistic attack paths, and understanding business impact. Analytical thinking helps penetration testers turn technical data into actionable security insight.

Continuous Learning and Adaptability

Cybersecurity evolves rapidly, and AI is accelerating that pace. New attack techniques, tools, and defensive strategies appear constantly. Professionals who maintain curiosity, stay updated with emerging technologies, and adapt to changing workflows will remain effective in an AI-assisted security environment.

In this landscape, technical knowledge remains important, but the ability to think critically, interpret automated results, and adapt to intelligent systems increasingly defines effective penetration testers.

Future of Pen Testing: From Periodic Testing to Continuous Security

Traditionally, penetration testing has been performed at specific intervals, such as once or twice a year, or before major product releases. This approach worked when systems changed slowly and infrastructure remained relatively stable. Today, however, applications are updated frequently, new services are added regularly, and configurations change continuously. Under these conditions, security risks can emerge at any time, making periodic testing alone insufficient.

Artificial intelligence is enabling a gradual shift toward continuous security testing. Instead of testing environments only at fixed points in time, AI-assisted systems can monitor changes, analyze new configurations, and identify potential weaknesses as systems evolve. This allows organizations to detect risks earlier rather than waiting for the next scheduled assessment.
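One building block of continuous testing is detecting what changed since the last assessment, so that new exposure gets retested first. The Python sketch below compares two hypothetical snapshots of `{asset: open ports}`. In practice these would come from an asset inventory or recurring scans. Deciding which changes actually matter is where AI-assisted analysis and human review would come in.

```python
def diff_snapshots(previous, current):
    """Compare two {asset: set_of_open_ports} snapshots and report what changed."""
    changes = {
        "new_assets": sorted(current.keys() - previous.keys()),
        "removed_assets": sorted(previous.keys() - current.keys()),
        "new_ports": {},
    }
    # For assets present in both snapshots, report ports that were not open before.
    for asset in current.keys() & previous.keys():
        added = current[asset] - previous[asset]
        if added:
            changes["new_ports"][asset] = sorted(added)
    return changes


# Hypothetical snapshots from the last assessment and from today.
last_test = {"api.example.com": {443}, "vpn.example.com": {443}, "old.example.com": {80}}
today = {"api.example.com": {443, 8080}, "vpn.example.com": {443}, "staging.example.com": {22, 443}}

changes = diff_snapshots(last_test, today)
print(changes)
```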

In this model, penetration testers play a more strategic role. Rather than spending most of their time on discovery, they focus on validating high-risk findings, designing realistic attack simulations, and helping organizations understand how vulnerabilities could impact real operations. Testing becomes an ongoing process supported by automation, while human expertise guides decision-making and prioritization.

The future of penetration testing is therefore moving toward collaboration between intelligent systems and skilled professionals. Organizations are shifting from reacting to security incidents after they occur to identifying and addressing risks earlier. As AI continues to improve analysis and coverage, penetration testing is expected to become more proactive, continuous, and closely integrated into everyday security operations.

Challenges and Risks of AI in Pen Testing

While artificial intelligence brings speed and efficiency to penetration testing, its use also introduces new challenges that security professionals must understand. AI improves analysis and automation, but it does not eliminate the need for human oversight or careful validation.

Risk of Over-Reliance on Automation

One of the main concerns is the tendency to trust automated results without sufficient verification. AI tools can generate large numbers of findings, but not all of them represent real or exploitable risks. If penetration testers rely entirely on automated outputs, important context may be missed, leading to incorrect conclusions or overlooked vulnerabilities.

False Positives and Misinterpretation

AI systems identify patterns based on data, which means they can sometimes flag issues that are not practically exploitable. Security teams must still validate findings and understand real-world impact. Without human review, organizations may spend time fixing low-risk issues while more serious problems remain unresolved.
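One way to keep automated output honest is to measure it against what testers actually confirm. The Python sketch below assumes two sets of hypothetical finding IDs:

* those flagged by a tool;
* those a tester validated by hand, including one the tool missed.

It reports the tool's precision, its false positives, and the findings it missed.

```python
def triage_stats(flagged, confirmed):
    """Compare tool-flagged finding IDs against those a tester confirmed by hand."""
    true_pos = flagged & confirmed
    return {
        "precision": len(true_pos) / len(flagged) if flagged else 0.0,
        "false_positives": sorted(flagged - confirmed),  # flagged but not real
        "missed": sorted(confirmed - flagged),            # real but not flagged
    }


# Hypothetical finding IDs.
flagged_by_tool = {"F-101", "F-102", "F-103", "F-104", "F-105"}
confirmed_by_tester = {"F-102", "F-105", "F-201"}

stats = triage_stats(flagged_by_tool, confirmed_by_tester)
print(f"precision {stats['precision']:.0%}")
print("false positives:", ", ".join(stats["false_positives"]))
print("missed by tool:", ", ".join(stats["missed"]))
```

In this example the tool's precision is 40%, and three flagged items do not hold up. One real issue (`F-201`) surfaces only through manual testing. That covers both risks described here: noise from false positives, and vulnerabilities overlooked when automated output is trusted alone.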

Data Quality and Model Limitations

AI systems are only as reliable as the data used to train them. If training data is incomplete or outdated, results may be inaccurate or biased toward certain environments. Penetration testers must understand that AI recommendations are probabilistic, not guaranteed outcomes.
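Because AI recommendations are probabilistic, even high-confidence findings will contain errors. The short Python calculation below estimates how many findings in a batch are likely wrong, using linearity of expectation. It assumes the tool's confidence scores are well calibrated, meaning a finding scored 0.9 is correct about 90% of the time. The scores are invented for illustration.

```python
def expected_false_positives(confidences):
    """Expected number of wrong findings, assuming well-calibrated confidence scores.

    By linearity of expectation, each finding with confidence p contributes (1 - p).
    """
    return sum(1.0 - p for p in confidences)


# Hypothetical model confidence scores for ten findings from one scan.
scores = [0.97, 0.95, 0.92, 0.90, 0.88, 0.85, 0.80, 0.75, 0.70, 0.60]

print(f"Expected incorrect findings: {expected_false_positives(scores):.1f} of {len(scores)}")
```

Every score is 0.6 or above, yet roughly one to two of the ten findings are still expected to be incorrect. If the scores are not calibrated, this estimate is itself unreliable.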

Ethical and Security Concerns

The same AI capabilities that assist defenders can also be misused by attackers. Automated vulnerability discovery and intelligent attack generation increase the speed at which threats evolve. This creates an ongoing challenge where defensive capabilities must continuously adapt to keep pace with offensive innovation.

In practice, AI strengthens penetration testing when used as an assistive tool rather than an autonomous decision-maker. Responsible use requires human validation, clear understanding of limitations, and careful integration into existing security processes to ensure accuracy and reliability.

Where Beginners Should Start in an AI-Driven Cybersecurity World

For beginners entering cybersecurity, it is important to understand that AI does not replace foundational knowledge. Before working with AI-assisted penetration testing tools, learners should first understand networking basics, operating systems, web application behavior, and common vulnerabilities. AI becomes useful only after understanding how attacks actually work. Professionals who build strong fundamentals first and then learn how AI supports testing workflows are better prepared for real-world security roles.

For learners who want structured guidance while building these foundations, training programs that combine ethical hacking fundamentals with modern security practices can help reduce confusion and provide practical direction. Programs such as the Ethical Hacking Course offered by Win in Life Academy focus on real-world security concepts, vulnerability assessment, and modern testing workflows aligned with current industry requirements, helping beginners move from theory to practical application.

Conclusion

AI in penetration testing is reshaping how security teams manage the growing scale and complexity of modern technology environments without replacing the need for human expertise. From faster analysis and better prioritization to continuous monitoring, AI enables penetration testers to identify risks more efficiently while freeing them to focus on validation, strategy, and realistic attack simulation.

At the same time, the role of penetration testers is evolving rather than disappearing. Human judgment, creativity, and contextual understanding remain essential for interpreting findings and understanding real-world impact. AI improves speed and coverage, but effective security still depends on professionals who can think critically and make informed decisions.

The future of penetration testing will not be defined by automation alone, but by professionals who can combine technical knowledge, analytical thinking, and responsible use of intelligent tools to strengthen security in an increasingly complex digital landscape.

Frequently Asked Questions (FAQs)

1. What does AI in penetration testing actually mean?

AI in penetration testing refers to the use of artificial intelligence to assist security professionals in scanning systems, analyzing vulnerabilities, and prioritizing risks more efficiently while keeping human validation and decision-making central to the process.

2. How is the future of penetration testing changing with AI?

The future of penetration testing is moving toward continuous and automated security assessment. AI helps organizations monitor systems more frequently, identify risks earlier, and shift from periodic testing to proactive security models.

3. What is automated penetration testing and how is it different from traditional testing?

Automated penetration testing uses AI and automation tools to perform repetitive tasks such as scanning and data analysis. Traditional testing relies more heavily on manual effort, while automated approaches help testers focus on validation and complex attack scenarios.

4. Is AI ethical hacking different from traditional ethical hacking?

AI ethical hacking still follows the same principles of identifying vulnerabilities responsibly, but AI tools assist in faster reconnaissance, analysis, and prioritization. Human expertise remains necessary to interpret findings and simulate realistic attack paths.

5. How does automation in penetration testing improve security outcomes?

Automation in penetration testing reduces time spent on repetitive tasks and allows security teams to analyze larger environments more consistently. This helps organizations identify critical vulnerabilities faster and respond before attackers exploit them.

6. What are AI-powered penetration testing tools used for?

AI-powered penetration testing tools are commonly used for vulnerability discovery, attack path analysis, reducing false positives, and improving threat prioritization by analyzing large volumes of security data.

7. How does machine learning in cybersecurity support penetration testers?

Machine learning in cybersecurity helps identify patterns across large datasets, making it easier to detect unusual behavior, highlight potential vulnerabilities, and support decision-making during security assessments.

8. Can AI help with threat detection during penetration testing?

Yes. AI supports threat detection by analyzing system behavior and identifying patterns that may indicate exploitable weaknesses. However, penetration testers must still validate whether these findings represent real-world risks.

9. What is the role of AI in next-generation penetration testing?

Next-generation penetration testing combines automation, AI analysis, and human expertise to provide continuous visibility into security risks rather than relying only on periodic assessments.

10. Does AI-driven cybersecurity reduce the need for penetration testers?

No. AI-driven cybersecurity increases the need for skilled professionals who can interpret automated findings, design realistic attack simulations, and make strategic security decisions based on AI-assisted insights.