Ethical hacking with AI means using artificial intelligence, machine learning, and large language models to find security weaknesses faster, simulate real-world attacks, and protect modern systems from AI-driven cyber threats. As attackers use AI to automate and scale attacks, ethical hackers use the same technology to strengthen defenses and ensure AI systems remain secure and reliable.

A decade ago, launching a cyberattack required deep technical knowledge, custom tools, and significant effort. Today, AI has lowered that barrier. Large Language Models can generate scripts, automate reconnaissance, and help attackers move faster with far less expertise. At the same time, this shift has forced ethical hackers to evolve. Modern penetration testing is no longer just about manual techniques. Security professionals now rely on AI, machine learning, and LLMs to detect vulnerabilities faster, simulate real-world attacks, and respond at a scale humans alone cannot manage.

AI has fundamentally changed how both attacks and defense operate in cybersecurity, and ethical hacking is adapting to this new reality. This change is a major reason why AI in ethical hacking is becoming essential for modern security teams.

This blog explores how AI, machine learning, and large language models are changing both cyberattacks and cybersecurity defenses. It examines how attackers use AI to scale attacks, how ethical hackers use AI for testing and defense, and why AI systems themselves must now be tested, monitored, and secured as part of modern ethical hacking.

How Hackers Are Using AI and LLMs

Cyberattacks no longer require the same level of technical skill they once did. AI has made it easier to generate convincing messages, automate research on targets, and speed up parts of the attack process that previously took time and expertise. What used to be slow and manual can now be assisted by intelligent systems that work at scale.

Attackers use AI to gather publicly available information, understand how systems behave, and identify potential weaknesses faster. Large language models can generate phishing emails, fake support messages, or social engineering scripts that sound natural and believable. This makes attacks harder for people to recognize and increases the chances of success.

AI is also used to automate repetitive tasks such as scanning for vulnerabilities or modifying malicious code to avoid detection. The result is not necessarily more sophisticated attackers, but faster ones. Attacks can be launched more frequently, adjusted quickly, and repeated across multiple targets with minimal effort. This shift is one of the main reasons cybersecurity practices are changing today. It is also accelerating the adoption of AI in ethical hacking to counter rapidly evolving threats.

| ■ Case Study: Microsoft Tay Chatbot Failure |

| When Microsoft launched the Tay chatbot on Twitter in 2016, it was designed to learn from public interactions, but within hours malicious users manipulated its responses, forcing the bot to generate offensive and inappropriate content and ultimately shutting it down. The incident exposed a critical AI weakness: systems that learn from uncontrolled inputs can be rapidly influenced in unintended ways, showing why AI must be tested against adversarial behavior before public release rather than only under normal usage conditions. |

How Ethical Hackers Use AI for Defense and Penetration Testing

Ethical hackers are adapting by using AI in the same way attackers do — to move faster and handle complexity that humans alone cannot manage efficiently. Modern systems generate enormous amounts of data and interactions, and manual testing alone is no longer practical. This evolution reflects the practical rise of ethical hacking with AI across real-world environments.

AI helps ethical hackers during early testing stages by organizing information, analyzing system responses, and highlighting areas that deserve closer attention. Instead of spending time searching blindly, security professionals can focus on validating real risks and understanding how an attacker might move through a system.

Another major use of AI is in analyzing logs and system activity. Security teams deal with continuous streams of data, and important signals are easy to miss. Machine learning helps identify unusual patterns or behavior that may indicate a vulnerability or ongoing attack, demonstrating the expanding role of machine learning in ethical hacking, allowing faster investigation and response.

AI also supports code and API security testing by identifying common weaknesses and unexpected behavior across large applications. As systems become more interconnected, this allows ethical hackers to test environments more thoroughly without increasing testing time. AI does not replace expertise, but it makes security testing more practical in environments that continue to grow in size and complexity. For many organizations, AI in ethical hacking now enables security teams to maintain coverage at scale.

Ethical Hacking Against AI Systems and Large Language Models

As organizations begin relying on AI systems themselves, ethical hacking has expanded into a new area. The question is no longer only whether systems are secure, but whether the AI inside those systems can be manipulated. This growing concern has become central to modern large language model security strategies. Addressing this challenge is at the heart of modern AI security testing initiatives.

One common concern is prompt manipulation, a category of risk widely known as prompt injection attacks, where attackers try to influence AI responses through carefully written inputs. Ethical hackers test whether AI systems can be pushed into ignoring restrictions, exposing sensitive information, or producing outputs they were not meant to generate. These tests reveal how resilient an AI system really is outside controlled environments.

Data poisoning is another risk. Because AI systems learn from data, incorrect or intentionally altered data can change how a model behaves over time. Ethical hackers evaluate whether these systems can be influenced in ways that reduce accuracy or introduce harmful outcomes.

Security testing also includes checking for model theft and information leakage. AI models represent valuable intellectual property, and weak protections may allow attackers to extract information through repeated interaction or misuse. Adversarial testing further examines whether small changes to inputs can cause incorrect or unstable results. This field of study is commonly referred to as adversarial machine learning.

Finally, ethical hackers review how AI APIs are accessed and monitored. Poorly secured interfaces can allow attackers to misuse AI capabilities or extract data at scale. Testing these areas helps ensure AI systems remain reliable when exposed to real-world usage rather than ideal conditions. Achieving this reliability increasingly depends on structured AI in ethical hacking practices.

By testing AI systems directly, ethical hackers help organizations ensure that intelligent technologies remain safe, predictable, and secure as they become part of everyday operations.

| ■ Case Study: Deepfake-Enabled Financial Fraud |

| In a major corporate fraud incident, attackers used AI-generated voice cloning to impersonate a company’s chief financial officer during a virtual meeting, convincing employees to authorize a transfer exceeding $25 million before the deception was discovered. The attack demonstrated that AI threats are no longer limited to software vulnerabilities, as realistic synthetic media can exploit human trust and decision-making processes at scale making, forcing organizations to rethink verification processes alongside technical security controls. |

AI Red Teaming and the Future of Ethical Hacking

As AI systems move from experimentation into everyday business use, traditional security testing is no longer enough. AI does not fail in predictable ways like normal software. It reacts to inputs, learns from data, and behaves differently depending on how people interact with it. This has led to the rise of AI red teaming, where security professionals deliberately test how AI systems behave when exposed to misuse, manipulation, or unexpected situations before those weaknesses are discovered in the real world.

What AI Red Teaming Means

AI red teaming is the practice of intentionally challenging AI systems to understand how and where they break. Ethical hackers simulate realistic misuse scenarios, trying to confuse the system, bypass safeguards, or push it beyond its intended limits. The objective is not to damage the system but to expose failure points early. By observing how an AI responds under pressure rather than ideal conditions, organizations gain a clearer understanding of whether their safeguards actually work outside controlled environments.

Why Companies Are Hiring for It

Organizations are rapidly integrating AI into customer support, financial workflows, healthcare assistance, and internal decision-making systems. When these systems fail, the impact goes beyond technical errors. Incorrect outputs can lead to financial loss, reputational harm, compliance risks, or unsafe decisions. Because of this, companies increasingly need professionals who understand both cybersecurity and AI behavior, people who can test whether systems remain reliable when used in unpredictable ways and ensure that AI operates within defined boundaries.

AI vs AI Security Warfare

Cybersecurity is gradually moving toward a landscape where AI operates on both sides of the conflict. Attackers use AI to automate reconnaissance, generate convincing attacks, and adapt quickly, while defenders rely on AI to detect anomalies, analyze threats, and respond faster than manual processes allow. This creates a continuous cycle where intelligent systems are tested against one another. In this environment, ethical hackers become the deciding factor, interpreting results, identifying weaknesses, and ensuring defensive systems evolve faster than the threats they are designed to stop.

Skills Ethical Hackers Must Master in 2026

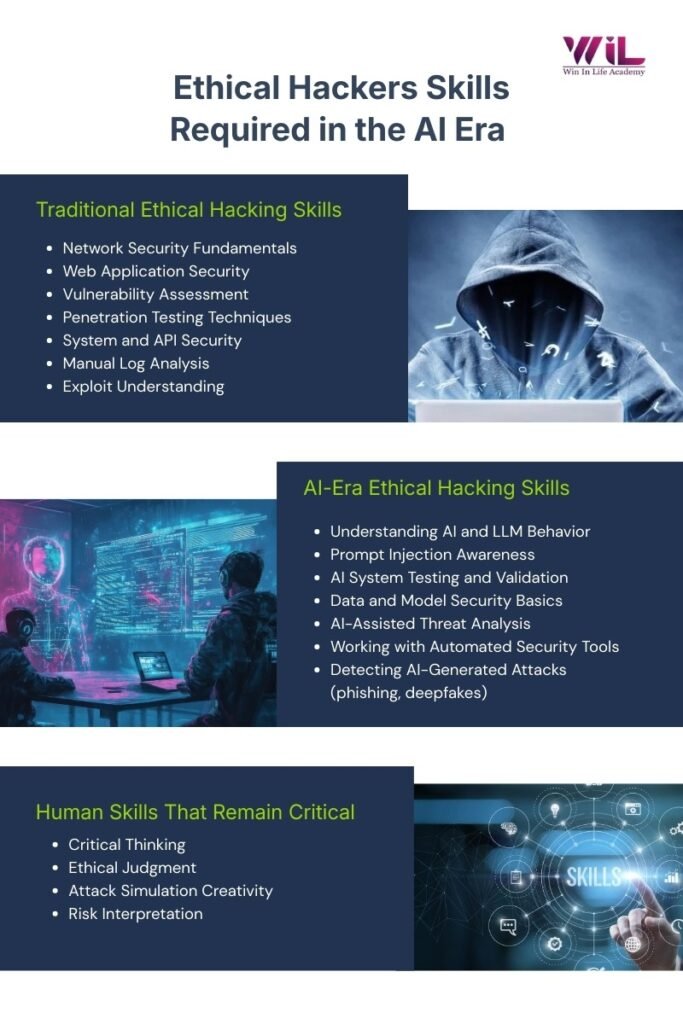

The skills ethical hackers need are expanding beyond traditional security knowledge as AI becomes part of modern systems. Future ethical hackers must understand how AI models make decisions, how data influences outcomes, and how outputs can be manipulated through prompts or unexpected inputs, while still maintaining strong foundations in networking, application security, and system architecture. They will increasingly work alongside AI-driven security tools, interpreting automated findings, validating risks, and distinguishing real threats from noise rather than relying only on manual testing.

As security shifts toward protecting intelligent systems as well as infrastructure, human judgment, creativity, and ethical responsibility remain critical, because AI can assist in detection and automation but cannot fully understand context, intent, or the consequences of failure in real-world environments.

Conclusion

AI has not replaced cybersecurity fundamentals, but it has changed how quickly attacks evolve and how complex modern systems have become. Ethical hacking today is no longer limited to finding technical flaws; which is why AI in ethical hacking has quickly become central to modern defense strategies, it requires understanding how AI systems behave, how they can be influenced, and how security must adapt when intelligent technologies become part of everyday operations. This shift is exactly why structured, industry-aligned training matters.

Programs like the CEH v13 AI training offered by Win In Life Academy focus on bridging traditional ethical hacking with AI-aware security practices, helping learners move beyond outdated approaches and prepare for the realities of modern cybersecurity environments. As AI continues to reshape digital systems, the ethical hackers who stay relevant will be those who understand both security fundamentals and the responsible use of intelligent technologies, ensuring innovation does not come at the cost of safety or reliability.

FAQ

1. What skills are required for ethical hacking in AI environments

Professionals need a mix of cybersecurity fundamentals, understanding of how AI systems behave, and the ability to analyze risks. Critical thinking, problem-solving, and the ability to simulate attacker behavior are essential.

2. Do ethical hackers need programming knowledge for AI security

Basic programming knowledge is highly valuable. It helps professionals understand system logic, automate testing tasks, and interpret how AI applications process inputs and generate outputs.

3. What technical background helps in learning AI-focused ethical hacking

A foundation in networking, operating systems, application security, and data handling is useful. Familiarity with APIs, cloud environments, and system architecture also supports effective testing.

4. What tools are commonly used in AI-assisted ethical hacking

Professionals use a mix of penetration testing tools, automation platforms, log analysis systems, and AI-enabled monitoring solutions. The exact tools vary depending on the environment being tested.

5. Are there special tools for testing AI models

Yes. Organizations increasingly use tools designed to evaluate AI behavior, detect unsafe outputs, and simulate misuse scenarios. These tools help validate that AI systems operate within defined boundaries.

6. What new capabilities should ethical hackers build for the future

Future-focused ethical hackers must understand AI decision processes, model limitations, and how outputs can be influenced. Working alongside automated systems while maintaining human oversight will be critical.

7. Is mathematics or data science mandatory for AI security roles

Deep mathematical expertise is not always required, but understanding how data affects AI behavior is important. Professionals should be comfortable interpreting patterns and evaluating reliability.

8. How important is understanding APIs in AI security

Very important. Many AI services are accessed through APIs, making them common entry points for misuse. Ethical hackers must know how to test access controls, usage patterns, and integration risks.

9. What soft skills are important for ethical hackers working with AI

Communication, documentation, and ethical judgment are vital. Professionals must explain complex risks clearly to decision-makers and recommend responsible actions.

10. How can someone start preparing for AI-related ethical hacking roles

Start by building strong cybersecurity fundamentals, then learn how AI systems are deployed in real environments. Practice evaluating behavior, not just system access, and stay updated with emerging risks.